croaring bitmap

1 安装

git clone https://github.com/RoaringBitmap/CRoaring.git

cd CRoaring/

cd build/

cmake -DCMAKE_BUILD_TYPE=Debug ..

make -j4

然后就可以了

git clone https://github.com/RoaringBitmap/CRoaring.git

cd CRoaring/

cd build/

cmake -DCMAKE_BUILD_TYPE=Debug ..

make -j4

然后就可以了

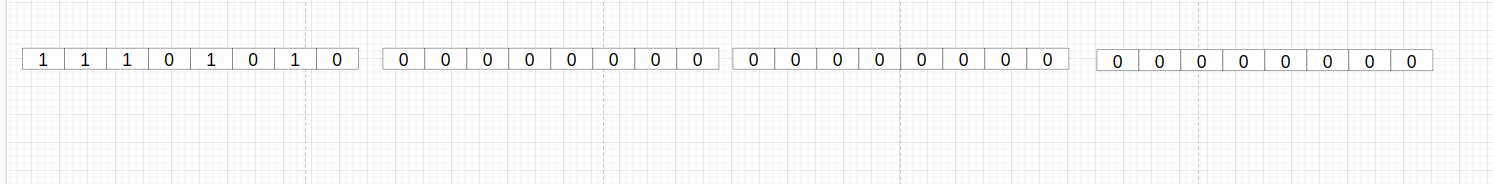

bitmap 在某个长度之后会占用内存比array小,利用这个特性,可以将数据的存储压缩成bitmap存储.

当我们有多个数字的数组,我们可以用多种方式描述一个数字.

array = [1 , 2 , 3 ,5,7] (1)

假设每个数字是一个Uint32 ,也就是4字节的数字.

那么上面例子(1) 中占用的字节数:5*4 = 20 字节 , 如果我们要存越大的数据需要的内存越多.我们占用的内存是线性的

memory = array.size() * 4

优点:

uint32 num = 0 ;

num |= num << 1 ;

num |= num << 2 ;

num |= num << 3 ;

num |= num << 5 ;

num |= num << 7 ;

那么可以存多少个数字呢?

4*8 = 32 也就是可以存32个数字

优点:

缺点

Uint的数字2^32个数只使用bitmap

如果要用bitmap来存,我们要用 2^32 / 8 = 2^29 byte = 256m

只使用array

需要的内存: 4*array.length byte

bitmap和array的区别: bitmap会固定占用的内存,array则是动态占用内存。 上面的例子(存储4字节长度的数字数组),bitmap会固定占用256m,而array则动态长度。在数组比较小的时候,使用array比较好,在数组长度比较大的时候,则使用bitmap比较好。

核心公式: number_length * n * 8 = 2^n

这里解释一下公式长度: number_length 描述的是一个数字的字节长度,比如要存储的是uint32 , 则number_length = 4 , 如果要存储uint64 ,则number_length = 8

4 *n*8 = 2^n 的解是16

所以用16个字节来描述一个联合体:{bitmap , array} , 当数组数量小�于16的时候使用array 存储,当数量大于等于16的时候使用bitmap描述

参考相关代码:

CRoaring/include/roaring/containers/containers.h

相关阅读:

A lease is a contract that gives its holder specified rights over property for a limited period of time. In the context of caching, a lease grants to its holder control over writes to the covered datum during the term of the lease, such that the server must obtain the approval of the leaseholder before the datum may be written. When a leaseholder grants approval for a write, it invalidates its local copy of the da 租约是一个租约持有人在一定时间内有特别的权限的合约. 在缓存这个场景下,租约保证他的持有人在租约期限内有写的权限 , 所以当服务器

rabbitmq-server/deps/rabbit/src/rabbit_msg_store.erl

%% Message store is responsible for storing messages

%% on disk and loading them back. The store handles both

%% persistent messages and transient ones (when a node

%% is under RAM pressure and needs to page messages out

%% to disk). The store is responsible for locating messages

%% on disk and maintaining an index.

消息存储是响应式地存储在硬盘或者把他们从硬盘加载到内存.存储的例程回调包括持久化的消息和非持久化的消息(当使用内存高出一定阈值,会把消息调入到硬盘).

这个存储模块会返回消息在硬盘的偏移,以及维持这个消息到硬盘的映射的索引.

%% There are two message stores per node: one for transient

%% and one for persistent messages.

每个节点有两种消息:

一个是对易失的消息 , 一个是持久化的消息

%%

%% Queue processes interact with the stores via clients.

队列进程和存储模块通过客户端交互

%% The components:

%%

%% Index: this is a mapping from MsgId to #msg_location{}.

%% By default, it's in ETS, but other implementations can

%% be used.

%% FileSummary: this maps File to #file_summary{} and is stored

%% in ETS.

包括两个组件:

%% The basic idea is that messages are appended to the current file up

%% until that file becomes too big (> file_size_limit). At that point,

%% the file is closed and a new file is created on the _right_ of the

%% old file which is used for new messages. Files are named

%% numerically ascending, thus the file with the lowest name is the

%% eldest file.

基本的思路是将消息加入到文件里面,直到文件变得足够大。在这个时候,会将文件关闭,

然后创建一个新的文件添加到旧有文件的右边。文件名会升序�命名,因此文件名数字比较

小的就是比较旧的文件。

%% We need to keep track of which messages are in which files (this is

%% the index); how much useful data is in each file and which files

%% are on the left and right of each other. This is the purpose of the

%% file summary ETS table.

我们需要确定消息在哪个文件(这就是一个索引的功能); 还有多少数据是有效在每个文

件以及每个文件的排序.这个的目的是为了确定表的统计.

%% As messages are removed from files, holes appear in these

%% files. The field ValidTotalSize contains the total amount of useful

%% data left in the file. This is needed for garbage collection.

当消息被从文件中移除,文件会出现空洞.ValidTotalSize字段会比有用的数据小,这需

要垃圾回收.

%% When we discover that a file is now empty, we delete it. When we

%% discover that it can be combined with the useful data in either its

%% left or right neighbour, and overall, across all the files, we have

%% ((the amount of garbage) / (the sum of all file sizes)) >

%% ?GARBAGE_FRACTION, we start a garbage collection run concurrently,

%% which will compact the two files together.

当发现文件是空,我们会删除他.当我们发现文件可以和自己的左右邻居合并

当garbage 数量与所有文件大

小的比例超过一定阈值,会开始垃圾回收

This keeps disk

%% utilisation high and aids performance. We deliberately do this

%% lazily in order to prevent doing GC on files which are soon to be

%% emptied (and hence deleted).

%% Given the compaction between two files, the left file (i.e. elder

%% file) is considered the ultimate destination for the good data in

%% the right file. If necessary, the good data in the left file which

%% is fragmented throughout the file is written out to a temporary

%% file, then read back in to form a contiguous chunk of good data at

%% the start of the left file. Thus the left file is garbage collected

%% and compacted. Then the good data from the right file is copied

%% onto the end of the left file. Index and file summary tables are

%% updated.

%%

%% On non-clean startup, we scan the files we discover, dealing with

%% the possibilities of a crash having occurred during a compaction

%% (this consists of tidyup - the compaction is deliberately designed

%% such that data is duplicated on disk rather than risking it being

%% lost), and rebuild the file summary and index ETS table.

%%

%% So, with this design, messages move to the left. Eventually, they

%% should end up in a contiguous block on the left and are then never

%% rewritten. But this isn't quite the case. If in a file there is one

%% message that is being ignored, for some reason, and messages in the

%% file to the right and in the current block are being read all the

%% time then it will repeatedly be the case that the good data from

%% both files can be combined and will be written out to a new

%% file. Whenever this happens, our shunned message will be rewritten.

%%

%% So, provided that we combine messages in the right order,

%% (i.e. left file, bottom to top, right file, bottom to top),

%% eventually our shunned message will end up at the bottom of the

%% left file. The compaction/combining algorithm is smart enough to

%% read in good data from the left file that is scattered throughout

%% (i.e. C and D in the below diagram), then truncate the file to just

%% above B (i.e. truncate to the limit of the good contiguous region

%% at the start of the file), then write C and D on top and then write

%% E, F and G from the right file on top. Thus contiguous blocks of

%% good data at the bottom of files are not rewritten.

%%

%% +-------+ +-------+ +-------+

%% | X | | G | | G |

%% +-------+ +-------+ +-------+

%% | D | | X | | F |

%% +-------+ +-------+ +-------+

%% | X | | X | | E |

%% +-------+ +-------+ +-------+

%% | C | | F | ===> | D |

%% +-------+ +-------+ +-------+

%% | X | | X | | C |

%% +-------+ +-------+ +-------+

%% | B | | X | | B |

%% +-------+ +-------+ +-------+

%% | A | | E | | A |

%% +-------+ +-------+ +-------+

%% left right left

%%

%% From this reasoning, we do have a bound on the number of times the

%% message is rewritten. From when it is inserted, there can be no

%% files inserted between it and the head of the queue, and the worst

%% case is that every time it is rewritten, it moves one position lower

%% in the file (for it to stay at the same position requires that

%% there are no holes beneath it, which means truncate would be used

%% and so it would not be rewritten at all). Thus this seems to

%% suggest the limit is the number of messages ahead of it in the

%% queue, though it's likely that that's pessimistic, given the

%% requirements for compaction/combination of files.

%%

%% The other property that we have is the bound on the lowest

%% utilisation, which should be 50% - worst case is that all files are

%% fractionally over half full and can't be combined (equivalent is

%% alternating full files and files with only one tiny message in

%% them).

%%

%% Messages are reference-counted. When a message with the same msg id

%% is written several times we only store it once, and only remove it

%% from the store when it has been removed the same number of times.

%%

%% The reference counts do not persist. Therefore the initialisation

%% function must be provided with a generator that produces ref count

%% deltas for all recovered messages. This is only used on startup

%% when the shutdown was non-clean.

%%

%% Read messages with a reference count greater than one are entered

%% into a message cache. The purpose of the cache is not especially

%% performance, though it can help there too, but prevention of memory

%% explosion. It ensures that as messages with a high reference count

%% are read from several processes they are read back as the same

%% binary object rather than multiples of identical binary

%% objects.

%%

%% Reads can be performed directly by clients without calling to the

%% server. This is safe because multiple file handles can be used to

%% read files. However, locking is used by the concurrent GC to make

%% sure that reads are not attempted from files which are in the

%% process of being garbage collected.

%%

%% When a message is removed, its reference count is decremented. Even

%% if the reference count becomes 0, its entry is not removed. This is

%% because in the event of the same message being sent to several

%% different queues, there is the possibility of one queue writing and

%% removing the message before other queues write it at all. Thus

%% accommodating 0-reference counts allows us to avoid unnecessary

%% writes here. Of course, there are complications: the file to which

%% the message has already been written could be locked pending

%% deletion or GC, which means we have to rewrite the message as the

%% original copy will now be lost.

%%

%% The server automatically defers reads, removes and contains calls

%% that occur which refer to files which are currently being

%% GC'd. Contains calls are only deferred in order to ensure they do

%% not overtake removes.

%%

%% The current file to which messages are being written has a

%% write-back cache. This is written to immediately by clients and can

%% be read from by clients too. This means that there are only ever

%% writes made to the current file, thus eliminating delays due to

%% flushing write buffers in order to be able to safely read from the

%% current file. The one exception to this is that on start up, the

%% cache is not populated with msgs found in the current file, and

%% thus in this case only, reads may have to come from the file

%% itself. The effect of this is that even if the msg_store process is

%% heavily overloaded, clients can still write and read messages with

%% very low latency and not block at all.

%%

%% Clients of the msg_store are required to register before using the

%% msg_store. This provides them with the necessary client-side state

%% to allow them to directly access the various caches and files. When

%% they terminate, they should deregister. They can do this by calling

%% either client_terminate/1 or client_delete_and_terminate/1. The

%% differences are: (a) client_terminate is synchronous. As a result,

%% if the msg_store is badly overloaded and has lots of in-flight

%% writes and removes to process, this will take some time to

%% return. However, once it does return, you can be sure that all the

%% actions you've issued to the msg_store have been processed. (b) Not

%% only is client_delete_and_terminate/1 asynchronous, but it also

%% permits writes and subsequent removes from the current

%% (terminating) client which are still in flight to be safely

%% ignored. Thus from the point of view of the msg_store itself, and

%% all from the same client:

%%

%% (T) = termination; (WN) = write of msg N; (RN) = remove of msg N

%% --> W1, W2, W1, R1, T, W3, R2, W2, R1, R2, R3, W4 -->

%%

%% The client obviously sent T after all the other messages (up to

%% W4), but because the msg_store prioritises messages, the T can be

%% promoted and thus received early.

%%

%% Thus at the point of the msg_store receiving T, we have messages 1

%% and 2 with a refcount of 1. After T, W3 will be ignored because

%% it's an unknown message, as will R3, and W4. W2, R1 and R2 won't be

%% ignored because the messages that they refer to were already known

%% to the msg_store prior to T. However, it can be a little more

%% complex: after the first R2, the refcount of msg 2 is 0. At that

%% point, if a GC occurs or file deletion, msg 2 could vanish, which

%% would then mean that the subsequent W2 and R2 are then ignored.

%%

%% The use case then for client_delete_and_terminate/1 is if the

%% client wishes to remove everything it's written to the msg_store:

%% it issues removes for all messages it's written and not removed,

%% and then calls client_delete_and_terminate/1. At that point, any

%% in-flight writes (and subsequent removes) can be ignored, but

%% removes and writes for messages the msg_store already knows about

%% will continue to be processed normally (which will normally just

%% involve modifying the reference count, which is fast). Thus we save

%% disk bandwidth for writes which are going to be immediately removed

%% again by the the terminating client.

%%

%% We use a separate set to keep track of the dying clients in order

%% to keep that set, which is inspected on every write and remove, as

%% small as possible. Inspecting the set of all clients would degrade

%% performance with many healthy clients and few, if any, dying

%% clients, which is the typical case.

%%

%% Client termination messages are stored in a separate ets index to

%% avoid filling primary message store index and message files with

%% client termination messages.

%%

%% When the msg_store has a backlog (i.e. it has unprocessed messages

%% in its mailbox / gen_server priority queue), a further optimisation

%% opportunity arises: we can eliminate pairs of 'write' and 'remove'

%% from the same client for the same message. A typical occurrence of

%% these is when an empty durable queue delivers persistent messages

%% to ack'ing consumers. The queue will asynchronously ask the

%% msg_store to 'write' such messages, and when they are acknowledged

%% it will issue a 'remove'. That 'remove' may be issued before the

%% msg_store has processed the 'write'. There is then no point going

%% ahead with the processing of that 'write'.

%%

%% To detect this situation a 'flying_ets' table is shared between the

%% clients and the server. The table is keyed on the combination of

%% client (reference) and msg id, and the value represents an

%% integration of all the writes and removes currently "in flight" for

%% that message between the client and server - '+1' means all the

%% writes/removes add up to a single 'write', '-1' to a 'remove', and

%% '0' to nothing. (NB: the integration can never add up to more than

%% one 'write' or 'read' since clients must not write/remove a message

%% more than once without first removing/writing it).

%%

%% Maintaining this table poses two challenges: 1) both the clients

%% and the server access and update the table, which causes

%% concurrency issues, 2) we must ensure that entries do not stay in

%% the table forever, since that would constitute a memory leak. We

%% address the former by carefully modelling all operations as

%% sequences of atomic actions that produce valid results in all

%% possible interleavings. We address the latter by deleting table

%% entries whenever the server finds a 0-valued entry during the

%% processing of a write/remove. 0 is essentially equivalent to "no

%% entry". If, OTOH, the value is non-zero we know there is at least

%% one other 'write' or 'remove' in flight, so we get an opportunity

%% later to delete the table entry when processing these.

%%

%% There are two further complications. We need to ensure that 1)

%% eliminated writes still get confirmed, and 2) the write-back cache

%% doesn't grow unbounded. These are quite straightforward to

%% address. See the comments in the code.

%%

%% For notes on Clean Shutdown and startup, see documentation in

%% rabbit_variable_queue.

lucene 分为两部分:

写入

写入则是写入文件系统

查询

则是通过了 分词、排序、topk提取等过程,获取对应的docid,再通过docid 回查对应的内容

vint 是一个可变长的数组,是一个小端的变长数组,每个字节最高位置1代表后面还有(也就是最后一个字节的最高位是0)

IndexWriterConfig iwc = new IndexWriterConfig(analyzer);

iwc.setUseCompoundFile(false); // 生成多个文件

### 调试java 代码

java -agentlib:jdwp=transport=dt_socket,server=y,address=8000 -cp ./lucene-demo-9.1.0-SNAPSHOT.jar:/home/ubuntu/lucene-9.1.0/lucene/core/build/libs/lucene-core-9.1.0-SNAPSHOT.jar:/home/ubuntu/lucene-9.1.0/lucene/queryparser/build/libs/lucene-queryparser-9.1.0-SNAPSHOT.jar org.apache.lucene.demo.SearchFiles

### jdb 连接上jdk

jdb -attach 8000 -sourcepath /home/ubuntu/lucene-9.1.0/lucene/demo/src/java/

hexdump -C _0.fdt

00000000 3f d7 6c 17 1c 4c 75 63 65 6e 65 39 30 53 74 6f |?.l..Lucene90Sto|

00000010 72 65 64 46 69 65 6c 64 73 46 61 73 74 44 61 74 |redFieldsFastDat|

00000020 61 00 00 00 01 85 88 12 2b 0c 73 6b 95 30 38 76 |a.......+.sk.08v|

00000030 c9 0a 2a 52 29 00 00 0a 00 01 00 1c 02 06 03 07 |..*R)...........|

00000040 07 07 07 07 07 07 07 07 20 00 1a 60 2f 68 6f 6d |........ ..`/hom|

00000050 65 2f 60 75 62 75 6e 74 75 60 2f 64 6f 63 2f 6d |e/`ubuntu`/doc/m|

00000060 60 6f 6e 67 6f 2e 74 60 78 74 00 1a 2f 68 60 6f |`ongo.t`xt../h`o|

00000070 6d 65 2f 75 62 60 75 6e 74 75 2f 64 60 6f 63 2f |me/ub`untu/d`oc/|

00000080 68 65 6c 60 6c 6f 2e 74 78 74 c0 28 93 e8 00 00 |hel`lo.txt.(....|

00000090 00 00 00 00 00 00 c8 75 0a 41 |.......u.A|

0000009a

ubuntu@VM-0-3-ubuntu:~$ jdb -attach 8000 -sourcepath /home/ubuntu/lucene-9.1.0/lucene/demo/src/java/:/home/ubuntu/lucene-9.1.0/lucene/core/src/java/

Set uncaught java.lang.Throwable

Set deferred uncaught java.lang.Throwable

Initializing jdb ...

>

VM Started: No frames on the current call stack

main[1] stop in org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsWriter.writeField

Deferring breakpoint org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsWriter.writeField.

It will be set after the class is loaded.

main[1] cont

> Set deferred breakpoint org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsWriter.writeField

Breakpoint hit: "thread=main", org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsWriter.writeField(), line=276 bci=0

276 ++numStoredFieldsInDoc;

main[1] wheree^H^H

Unrecognized command: 'wher'. Try help...

main[1] where

[1] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsWriter.writeField (Lucene90CompressingStoredFieldsWriter.java:276)

[2] org.apache.lucene.index.StoredFieldsConsumer.writeField (StoredFieldsConsumer.java:65)

[3] org.apache.lucene.index.IndexingChain.processField (IndexingChain.java:749)

[4] org.apache.lucene.index.IndexingChain.processDocument (IndexingChain.java:620)

[5] org.apache.lucene.index.DocumentsWriterPerThread.updateDocuments (DocumentsWriterPerThread.java:241)

[6] org.apache.lucene.index.DocumentsWriter.updateDocuments (DocumentsWriter.java:432)

[7] org.apache.lucene.index.IndexWriter.updateDocuments (IndexWriter.java:1,531)

[8] org.apache.lucene.index.IndexWriter.updateDocument (IndexWriter.java:1,816)

[9] org.apache.lucene.index.IndexWriter.addDocument (IndexWriter.java:1,469)

[10] org.apache.lucene.demo.IndexFiles.indexDoc (IndexFiles.java:271)

[11] org.apache.lucene.demo.IndexFiles$1.visitFile (IndexFiles.java:212)

[12] org.apache.lucene.demo.IndexFiles$1.visitFile (IndexFiles.java:208)

[13] java.nio.file.Files.walkFileTree (Files.java:2,725)

[14] java.nio.file.Files.walkFileTree (Files.java:2,797)

[15] org.apache.lucene.demo.IndexFiles.indexDocs (IndexFiles.java:206)

[16] org.apache.lucene.demo.IndexFiles.main (IndexFiles.java:157)

main[1] list

272

273 @Override

274 public void writeField(FieldInfo info, IndexableField field) throws IOException {

275

276 => ++numStoredFieldsInDoc;

277

278 int bits = 0;

279 final BytesRef bytes;

280 final String string;

281

main[1] print field

field = "stored,indexed,omitNorms,indexOptions=DOCS<path:/home/ubuntu/doc/mongo.txt>"

main[1] print info

info = "org.apache.lucene.index.FieldInfo@32464a14"

main[1] where

[1] org.apache.lucene.index.IndexingChain$PerField.invert (IndexingChain.java:1,138)

[2] org.apache.lucene.index.IndexingChain.processField (IndexingChain.java:729)

[3] org.apache.lucene.index.IndexingChain.processDocument (IndexingChain.java:620)

[4] org.apache.lucene.index.DocumentsWriterPerThread.updateDocuments (DocumentsWriterPerThread.java:241)

[5] org.apache.lucene.index.DocumentsWriter.updateDocuments (DocumentsWriter.java:432)

[6] org.apache.lucene.index.IndexWriter.updateDocuments (IndexWriter.java:1,531)

[7] org.apache.lucene.index.IndexWriter.updateDocument (IndexWriter.java:1,816)

[8] org.apache.lucene.demo.IndexFiles.indexDoc (IndexFiles.java:277)

[9] org.apache.lucene.demo.IndexFiles$1.visitFile (IndexFiles.java:212)

[10] org.apache.lucene.demo.IndexFiles$1.visitFile (IndexFiles.java:208)

[11] java.nio.file.Files.walkFileTree (Files.java:2,725)

[12] java.nio.file.Files.walkFileTree (Files.java:2,797)

[13] org.apache.lucene.demo.IndexFiles.indexDocs (IndexFiles.java:206)

[14] org.apache.lucene.demo.IndexFiles.main (IndexFiles.java:157)

IntBlockPool intPool,

ByteBlockPool bytePool,

ByteBlockPool termBytePool,

倒排索引term 在内存中用以下内容描述:

intPool 包含三个变量:

buffers[][]int bufferUpto 描述的是二维数组 buffers[][]的第一级的偏移 , 一般都是这样用 int[] buff = buffers[bufferUpto + offset]int intUpto 描述的是整体的偏移量,描述是偏移所有的buffers 的字节数int intOffset 描述的是header buffer的偏移量那么buffers[xxx][yyy]的值又是什么呢?

这个buffers二维数组存的也是偏移量.是什么的偏移量呢?

intPool描述的是bytePool 和termBytePool 的偏移量

会将term一个个写入

main[1] where

[1] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsWriter$TermsWriter.writeBlock (Lucene90BlockTreeTermsWriter.java:963)

[2] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsWriter$TermsWriter.writeBlocks (Lucene90BlockTreeTermsWriter.java:709)

[3] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsWriter$TermsWriter.finish (Lucene90BlockTreeTermsWriter.java:1,105)

[4] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsWriter.write (Lucene90BlockTreeTermsWriter.java:370)

[5] org.apache.lucene.codecs.perfield.PerFieldPostingsFormat$FieldsWriter.write (PerFieldPostingsFormat.java:171)

[6] org.apache.lucene.index.FreqProxTermsWriter.flush (FreqProxTermsWriter.java:131)

[7] org.apache.lucene.index.IndexingChain.flush (IndexingChain.java:300)

[8] org.apache.lucene.index.DocumentsWriterPerThread.flush (DocumentsWriterPerThread.java:391)

[9] org.apache.lucene.index.DocumentsWriter.doFlush (DocumentsWriter.java:493)

[10] org.apache.lucene.index.DocumentsWriter.flushAllThreads (DocumentsWriter.java:672)

[11] org.apache.lucene.index.IndexWriter.doFlush (IndexWriter.java:4,014)

[12] org.apache.lucene.index.IndexWriter.flush (IndexWriter.java:3,988)

[13] org.apache.lucene.index.IndexWriter.shutdown (IndexWriter.java:1,321)

[14] org.apache.lucene.index.IndexWriter.close (IndexWriter.java:1,361)

[15] org.apache.lucene.demo.IndexFiles.main (IndexFiles.java:166)

main[1] where

[1] org.apache.lucene.search.TopScoreDocCollector$SimpleTopScoreDocCollector.getLeafCollector (TopScoreDocCollector.java:57)

[2] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:759)

[3] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[4] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[5] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[7] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[8] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

从terms reader 读取term

main[1] print fieldMap.get(field)

fieldMap.get(field) = "BlockTreeTerms(seg=_j terms=18,postings=20,positions=25,docs=2)"

main[1] where

[1] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsReader.terms (Lucene90BlockTreeTermsReader.java:294)

[2] org.apache.lucene.codecs.perfield.PerFieldPostingsFormat$FieldsReader.terms (PerFieldPostingsFormat.java:353)

[3] org.apache.lucene.index.CodecReader.terms (CodecReader.java:114)

[4] org.apache.lucene.index.Terms.getTerms (Terms.java:41)

[5] org.apache.lucene.index.TermStates.loadTermsEnum (TermStates.java:115)

[6] org.apache.lucene.index.TermStates.build (TermStates.java:102)

[7] org.apache.lucene.search.TermQuery.createWeight (TermQuery.java:227)

[8] org.apache.lucene.search.IndexSearcher.createWeight (IndexSearcher.java:885)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:686)

[10] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[11] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[12] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[13] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

Breakpoint hit: "thread=main", org.apache.lucene.util.fst.FST.findTargetArc(), line=1,412 bci=0

1,412 if (labelToMatch == END_LABEL) {

main[1] where

[1] org.apache.lucene.util.fst.FST.findTargetArc (FST.java:1,412)

[2] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.seekExact (SegmentTermsEnum.java:511)

[3] org.apache.lucene.index.TermStates.loadTermsEnum (TermStates.java:117)

[4] org.apache.lucene.index.TermStates.build (TermStates.java:102)

[5] org.apache.lucene.search.TermQuery.createWeight (TermQuery.java:227)

[6] org.apache.lucene.search.IndexSearcher.createWeight (IndexSearcher.java:885)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:686)

[8] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[10] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[11] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1] where

[1] org.apache.lucene.codecs.lucene90.blocktree.Lucene90BlockTreeTermsReader.<init> (Lucene90BlockTreeTermsReader.java:135)

[2] org.apache.lucene.codecs.lucene90.Lucene90PostingsFormat.fieldsProducer (Lucene90PostingsFormat.java:427)

[3] org.apache.lucene.codecs.perfield.PerFieldPostingsFormat$FieldsReader.<init> (PerFieldPostingsFormat.java:329)

[4] org.apache.lucene.codecs.perfield.PerFieldPostingsFormat.fieldsProducer (PerFieldPostingsFormat.java:391)

[5] org.apache.lucene.index.SegmentCoreReaders.<init> (SegmentCoreReaders.java:118)

[6] org.apache.lucene.index.SegmentReader.<init> (SegmentReader.java:91)

[7] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:94)

[8] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:77)

[9] org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:809)

[10] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:109)

[11] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:67)

[12] org.apache.lucene.index.DirectoryReader.open (DirectoryReader.java:60)

[13] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:105)

获取topk的数据核心函数mergeAux,一个辅助函数获取topk的内容

Step completed: "thread=main", org.apache.lucene.search.TopDocs.mergeAux(), line=291 bci=43

291 for (int shardIDX = 0; shardIDX < shardHits.length; shardIDX++) {

main[1] where

[1] org.apache.lucene.search.TopDocs.mergeAux (TopDocs.java:291)

[2] org.apache.lucene.search.TopDocs.merge (TopDocs.java:216)

[3] org.apache.lucene.search.IndexSearcher$2.reduce (IndexSearcher.java:528)

[4] org.apache.lucene.search.IndexSearcher$2.reduce (IndexSearcher.java:505)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:694)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[7] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[8] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

docid 获取对应的文案内容main[1] where

[1] org.apache.lucene.store.ByteBufferIndexInput$SingleBufferImpl.seek (ByteBufferIndexInput.java:529)

[2] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader$BlockState.document (Lucene90CompressingStoredFieldsReader.java:594)

[3] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.document (Lucene90CompressingStoredFieldsReader.java:610)

[4] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.visitDocument (Lucene90CompressingStoredFieldsReader.java:628)

[5] org.apache.lucene.index.CodecReader.document (CodecReader.java:89)

[6] org.apache.lucene.index.BaseCompositeReader.document (BaseCompositeReader.java:154)

[7] org.apache.lucene.index.IndexReader.document (IndexReader.java:380)

[8] org.apache.lucene.search.IndexSearcher.doc (IndexSearcher.java:380)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:214)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

seek 方法通过偏移获取document,其中seek 中curBuf 是java.nio.DirectByteBufferR

525

526 @Override

527 public void seek(long pos) throws IOException {

528 try {

529 => curBuf.position((int) pos);

530 } catch (IllegalArgumentException e) {

531 if (pos < 0) {

532 throw new IllegalArgumentException("Seeking to negative position: " + this, e);

533 } else {

534 throw new EOFException("seek past EOF: " + this);

main[1] print curBuf

curBuf = "java.nio.DirectByteBufferR[pos=60 lim=154 cap=154]"

main[1] list

168

169 // NOTE: AIOOBE not EOF if you read too much

170 @Override

171 public void readBytes(byte[] b, int offset, int len) {

172 => System.arraycopy(bytes, pos, b, offset, len);

173 pos += len;

174 }

175 }

main[1] where

[1] org.apache.lucene.store.ByteArrayDataInput.readBytes (ByteArrayDataInput.java:172)

[2] org.apache.lucene.store.DataInput.readString (DataInput.java:265)

[3] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.readField (Lucene90CompressingStoredFieldsReader.java:246)

[4] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.visitDocument (Lucene90CompressingStoredFieldsReader.java:640)

[5] org.apache.lucene.index.CodecReader.document (CodecReader.java:89)

[6] org.apache.lucene.index.BaseCompositeReader.document (BaseCompositeReader.java:154)

[7] org.apache.lucene.index.IndexReader.document (IndexReader.java:380)

[8] org.apache.lucene.search.IndexSearcher.doc (IndexSearcher.java:380)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:214)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

Breakpoint hit: "thread=main", org.apache.lucene.store.ByteBufferIndexInput.setCurBuf(), line=83 bci=0

83 this.curBuf = curBuf;

main[1] where

[1] org.apache.lucene.store.ByteBufferIndexInput.setCurBuf (ByteBufferIndexInput.java:83)

[2] org.apache.lucene.store.ByteBufferIndexInput$SingleBufferImpl.<init> (ByteBufferIndexInput.java:520)

[3] org.apache.lucene.store.ByteBufferIndexInput.newInstance (ByteBufferIndexInput.java:60)

[4] org.apache.lucene.store.MMapDirectory.openInput (MMapDirectory.java:238)

[5] org.apache.lucene.store.Directory.openChecksumInput (Directory.java:152)

[6] org.apache.lucene.index.SegmentInfos.readCommit (SegmentInfos.java:297)

[7] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:88)

[8] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:77)

[9] org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:809)

[10] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:109)

[11] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:67)

[12] org.apache.lucene.index.DirectoryReader.open (DirectoryReader.java:60)

[13] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:105)

java 对应的类

src\java.base\share\classes\sun\nio\ch\FileChannelImpl.java

// Creates a new mapping

private native long map0(int prot, long position, long length, boolean isSync)

throws IOException;

native 对应的c实现类

src\java.base\unix\native\libnio\ch\FileChannelImpl.c

JNIEXPORT jlong JNICALL

Java_sun_nio_ch_FileChannelImpl_map0(JNIEnv *env, jobject this,

jint prot, jlong off, jlong len, jboolean map_sync)

{

void *mapAddress = 0;

jobject fdo = (*env)->GetObjectField(env, this, chan_fd);

jint fd = fdval(env, fdo);

int protections = 0;

int flags = 0;

// should never be called with map_sync and prot == PRIVATE

assert((prot != sun_nio_ch_FileChannelImpl_MAP_PV) || !map_sync);

if (prot == sun_nio_ch_FileChannelImpl_MAP_RO) {

protections = PROT_READ;

flags = MAP_SHARED;

} else if (prot == sun_nio_ch_FileChannelImpl_MAP_RW) {

protections = PROT_WRITE | PROT_READ;

flags = MAP_SHARED;

} else if (prot == sun_nio_ch_FileChannelImpl_MAP_PV) {

protections = PROT_WRITE | PROT_READ;

flags = MAP_PRIVATE;

}

// if MAP_SYNC and MAP_SHARED_VALIDATE are not defined then it is

// best to define them here. This ensures the code compiles on old

// OS releases which do not provide the relevant headers. If run

// on the same machine then it will work if the kernel contains

// the necessary support otherwise mmap should fail with an

// invalid argument error

#ifndef MAP_SYNC

#define MAP_SYNC 0x80000

#endif

#ifndef MAP_SHARED_VALIDATE

#define MAP_SHARED_VALIDATE 0x03

#endif

if (map_sync) {

// ensure

// 1) this is Linux on AArch64, x86_64, or PPC64 LE

// 2) the mmap APIs are available at compile time

#if !defined(LINUX) || ! (defined(aarch64) || (defined(amd64) && defined(_LP64)) || defined(ppc64le))

// TODO - implement for solaris/AIX/BSD/WINDOWS and for 32 bit

JNU_ThrowInternalError(env, "should never call map on platform where MAP_SYNC is unimplemented");

return IOS_THROWN;

#else

flags |= MAP_SYNC | MAP_SHARED_VALIDATE;

#endif

}

mapAddress = mmap64(

0, /* Let OS decide location */

len, /* Number of bytes to map */

protections, /* File permissions */

flags, /* Changes are shared */

fd, /* File descriptor of mapped file */

off); /* Offset into file */

if (mapAddress == MAP_FAILED) {

if (map_sync && errno == ENOTSUP) {

JNU_ThrowIOExceptionWithLastError(env, "map with mode MAP_SYNC unsupported");

return IOS_THROWN;

}

if (errno == ENOMEM) {

JNU_ThrowOutOfMemoryError(env, "Map failed");

return IOS_THROWN;

}

return handle(env, -1, "Map failed");

}

return ((jlong) (unsigned long) mapAddress);

}

FileChannel.open 底层是一个native方法,如果是linux系统,就是mmap64

main[1] list

228

229 /** Creates an IndexInput for the file with the given name. */

230 @Override

231 public IndexInput openInput(String name, IOContext context) throws IOException {

232 => ensureOpen();

233 ensureCanRead(name);

234 Path path = directory.resolve(name);

235 try (FileChannel c = FileChannel.open(path, StandardOpenOption.READ)) {

236 final String resourceDescription = "MMapIndexInput(path=\"" + path.toString() + "\")";

237 final boolean useUnmap = getUseUnmap();

main[1] print name

name = "_j.fnm"

main[1] where

[1] org.apache.lucene.store.MMapDirectory.openInput (MMapDirectory.java:232)

[2] org.apache.lucene.store.Directory.openChecksumInput (Directory.java:152)

[3] org.apache.lucene.codecs.lucene90.Lucene90FieldInfosFormat.read (Lucene90FieldInfosFormat.java:124)

[4] org.apache.lucene.index.SegmentCoreReaders.<init> (SegmentCoreReaders.java:111)

[5] org.apache.lucene.index.SegmentReader.<init> (SegmentReader.java:91)

[6] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:94)

[7] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:77)

[8] org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:809)

[9] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:109)

[10] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:67)

[11] org.apache.lucene.index.DirectoryReader.open (DirectoryReader.java:60)

[12] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:105)

mmap之后的buf在哪里会被用到呢?

和普通的文件读写类似,也就是seek后读字节

lucene\core\src\java\org\apache\lucene\store\ByteBufferIndexInput.java

@Override

public final void readBytes(byte[] b, int offset, int len) throws IOException {

try {

guard.getBytes(curBuf, b, offset, len);

} catch (

@SuppressWarnings("unused")

BufferUnderflowException e) {

int curAvail = curBuf.remaining();

while (len > curAvail) {

guard.getBytes(curBuf, b, offset, curAvail);

len -= curAvail;

offset += curAvail;

curBufIndex++;

if (curBufIndex >= buffers.length) {

throw new EOFException("read past EOF: " + this);

}

setCurBuf(buffers[curBufIndex]);

curBuf.position(0);

curAvail = curBuf.remaining();

}

guard.getBytes(curBuf, b, offset, len);

} catch (

@SuppressWarnings("unused")

NullPointerException npe) {

throw new AlreadyClosedException("Already closed: " + this);

}

}

mmap后读取数据

main[1] where

[1] jdk.internal.misc.Unsafe.copyMemory (Unsafe.java:782)

[2] java.nio.DirectByteBuffer.get (DirectByteBuffer.java:308)

[3] org.apache.lucene.store.ByteBufferGuard.getBytes (ByteBufferGuard.java:93)

[4] org.apache.lucene.store.ByteBufferIndexInput.readBytes (ByteBufferIndexInput.java:114)

[5] org.apache.lucene.store.BufferedChecksumIndexInput.readBytes (BufferedChecksumIndexInput.java:46)

[6] org.apache.lucene.store.DataInput.readString (DataInput.java:265)

[7] org.apache.lucene.codecs.CodecUtil.checkHeaderNoMagic (CodecUtil.java:202)

[8] org.apache.lucene.codecs.CodecUtil.checkHeader (CodecUtil.java:193)

[9] org.apache.lucene.codecs.CodecUtil.checkIndexHeader (CodecUtil.java:253)

[10] org.apache.lucene.codecs.lucene90.Lucene90FieldInfosFormat.read (Lucene90FieldInfosFormat.java:128)

[11] org.apache.lucene.index.SegmentCoreReaders.<init> (SegmentCoreReaders.java:111)

[12] org.apache.lucene.index.SegmentReader.<init> (SegmentReader.java:91)

[13] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:94)

[14] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:77)

[15] org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:809)

[16] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:109)

[17] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:67)

[18] org.apache.lucene.index.DirectoryReader.open (DirectoryReader.java:60)

[19] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:105)

fnm 文件 由这几部分组成:

fnm 描述的field的基础信息,也可以算是metadata信息

Field names are stored in the field info file, with suffix .fnm.

FieldInfos (.fnm) --> Header,FieldsCount, <FieldName,FieldNumber, FieldBits,DocValuesBits,DocValuesGen,Attributes,DimensionCount,DimensionNumBytes> ,Footer

Data types:

Header --> IndexHeader

FieldsCount --> VInt

FieldName --> String

FieldBits, IndexOptions, DocValuesBits --> Byte

FieldNumber, DimensionCount, DimensionNumBytes --> VInt

Attributes --> Map<String,String>

DocValuesGen --> Int64

Footer --> CodecFooter

Field Descriptions:

FieldsCount: the number of fields in this file.

FieldName: name of the field as a UTF-8 String.

FieldNumber: the field's number. Note that unlike previous versions of Lucene, the fields are not numbered implicitly by their order in the file, instead explicitly.

FieldBits: a byte containing field options.

The low order bit (0x1) is one for fields that have term vectors stored, and zero for fields without term vectors.

If the second lowest order-bit is set (0x2), norms are omitted for the indexed field.

If the third lowest-order bit is set (0x4), payloads are stored for the indexed field.

IndexOptions: a byte containing index options.

0: not indexed

1: indexed as DOCS_ONLY

2: indexed as DOCS_AND_FREQS

3: indexed as DOCS_AND_FREQS_AND_POSITIONS

4: indexed as DOCS_AND_FREQS_AND_POSITIONS_AND_OFFSETS

DocValuesBits: a byte containing per-document value types. The type recorded as two four-bit integers, with the high-order bits representing norms options, and the low-order bits representing DocValues options. Each four-bit integer can be decoded as such:

0: no DocValues for this field.

1: NumericDocValues. (DocValuesType.NUMERIC)

2: BinaryDocValues. (DocValuesType#BINARY)

3: SortedDocValues. (DocValuesType#SORTED)

DocValuesGen is the generation count of the field's DocValues. If this is -1, there are no DocValues updates to that field. Anything above zero means there are updates stored by DocValuesFormat.

Attributes: a key-value map of codec-private attributes.

PointDimensionCount, PointNumBytes: these are non-zero only if the field is indexed as points, e.g. using LongPoint

VectorDimension: it is non-zero if the field is indexed as vectors.

VectorSimilarityFunction: a byte containing distance function used for similarity calculation.

0: EUCLIDEAN distance. (VectorSimilarityFunction.EUCLIDEAN)

1: DOT_PRODUCT similarity. (VectorSimilarityFunction.DOT_PRODUCT)

2: COSINE similarity. (VectorSimilarityFunction.COSINE)

文件路径: lucene\backward-codecs\src\java\org\apache\lucene\backward_codecs\lucene50\Lucene50CompoundFormat.java

没有找到90的版本的fdt格式,只有2.9.4的,将就使用fdt格式

main[1] print fieldsStreamFN

fieldsStreamFN = "_j.fdt"

main[1] list

124 numDocs = si.maxDoc();

125

126 final String fieldsStreamFN =

127 IndexFileNames.segmentFileName(segment, segmentSuffix, FIELDS_EXTENSION);

128 => ChecksumIndexInput metaIn = null;

129 try {

130 // Open the data file

131 fieldsStream = d.openInput(fieldsStreamFN, context);

132 version =

133 CodecUtil.checkIndexHeader(

main[1] where

[1] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.<init> (Lucene90CompressingStoredFieldsReader.java:128)

[2] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsFormat.fieldsReader (Lucene90CompressingStoredFieldsFormat.java:133)

[3] org.apache.lucene.codecs.lucene90.Lucene90StoredFieldsFormat.fieldsReader (Lucene90StoredFieldsFormat.java:136)

[4] org.apache.lucene.index.SegmentCoreReaders.<init> (SegmentCoreReaders.java:138)

[5] org.apache.lucene.index.SegmentReader.<init> (SegmentReader.java:91)

[6] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:94)

[7] org.apache.lucene.index.StandardDirectoryReader$1.doBody (StandardDirectoryReader.java:77)

[8] org.apache.lucene.index.SegmentInfos$FindSegmentsFile.run (SegmentInfos.java:809)

[9] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:109)

[10] org.apache.lucene.index.StandardDirectoryReader.open (StandardDirectoryReader.java:67)

[11] org.apache.lucene.index.DirectoryReader.open (DirectoryReader.java:60)

[12] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:105)

整个流程是通过docid 获取document 的内容

@Override

public void visitDocument(int docID, StoredFieldVisitor visitor) throws IOException {

final SerializedDocument doc = document(docID); // 通过docID 获取doc对象

for (int fieldIDX = 0; fieldIDX < doc.numStoredFields; fieldIDX++) {

final long infoAndBits = doc.in.readVLong();

final int fieldNumber = (int) (infoAndBits >>> TYPE_BITS);

final FieldInfo fieldInfo = fieldInfos.fieldInfo(fieldNumber);

final int bits = (int) (infoAndBits & TYPE_MASK);

assert bits <= NUMERIC_DOUBLE : "bits=" + Integer.toHexString(bits);

switch (visitor.needsField(fieldInfo)) {

case YES:

readField(doc.in, visitor, fieldInfo, bits); // 通过input , 也就是input 绑定的fd ,去读mmap64 映射的文件 ,在这里会读取后缀名为 .fdt 的文件

break;

...

}

}

}

main[1] where

[1] org.apache.lucene.document.Document.add (Document.java:60)

[2] org.apache.lucene.document.DocumentStoredFieldVisitor.stringField (DocumentStoredFieldVisitor.java:74)

[3] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.readField (Lucene90CompressingStoredFieldsReader.java:246)

[4] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.visitDocument (Lucene90CompressingStoredFieldsReader.java:640)

[5] org.apache.lucene.index.CodecReader.document (CodecReader.java:89)

[6] org.apache.lucene.index.BaseCompositeReader.document (BaseCompositeReader.java:154)

[7] org.apache.lucene.index.IndexReader.document (IndexReader.java:380)

[8] org.apache.lucene.search.IndexSearcher.doc (IndexSearcher.java:380)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:214)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

首先入口在这里:

org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.document

Lucene90CompressingStoredFieldsReader的document 方法

main[1] where

[1] org.apache.lucene.store.ByteBufferIndexInput$SingleBufferImpl.seek (ByteBufferIndexInput.java:529)

[2] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.document (Lucene90CompressingStoredFieldsReader.java:606)

[3] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.visitDocument (Lucene90CompressingStoredFieldsReader.java:628)

[4] org.apache.lucene.index.CodecReader.document (CodecReader.java:89)

[5] org.apache.lucene.index.BaseCompositeReader.document (BaseCompositeReader.java:154)

[6] org.apache.lucene.index.IndexReader.document (IndexReader.java:380)

[7] org.apache.lucene.search.IndexSearcher.doc (IndexSearcher.java:380)

[8] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:214)

[9] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

SerializedDocument document(int docID) throws IOException {

if (state.contains(docID) == false) {

fieldsStream.seek(indexReader.getStartPointer(docID)); // 通过mmap64 偏移

state.reset(docID);

}

assert state.contains(docID);

return state.document(docID); // 再看具体的实现 , 这个state 对象对应的类是一��个静态内部类

}

下面看看静态内部类的实现

/**

* Get the serialized representation of the given docID. This docID has to be contained in the

* current block.

*/

SerializedDocument document(int docID) throws IOException {

if (contains(docID) == false) {

throw new IllegalArgumentException();

}

final int index = docID - docBase;

final int offset = Math.toIntExact(offsets[index]);

final int length = Math.toIntExact(offsets[index + 1]) - offset;

final int totalLength = Math.toIntExact(offsets[chunkDocs]);

final int numStoredFields = Math.toIntExact(this.numStoredFields[index]);

final BytesRef bytes;

if (merging) {

bytes = this.bytes;

} else {

bytes = new BytesRef();

}

final DataInput documentInput;

if (length == 0) {

...

} else {

fieldsStream.seek(startPointer); // seek mmap64 偏移量获取文件

decompressor.decompress(fieldsStream, totalLength, offset, length, bytes); // 解压对应的数据

assert bytes.length == length;

documentInput = new ByteArrayDataInput(bytes.bytes, bytes.offset, bytes.length); // 将数据塞入bytes

}

return new SerializedDocument(documentInput, length, numStoredFields); // 构建SerializedDocument

}

}

下面具体描述加载内容的过程:

pos = 4

main[1] dump bytes

bytes = {

120, 116, 0, 26, 47, 104, 111, 109, 101, 47, 117, 98, 117, 110, 116, 117, 47, 100, 111, 99, 47, 104, 101, 108, 108, 111, 46, 116, 120, 116, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0

}

main[1] print in

in = "MMapIndexInput(path="/home/ubuntu/index/_j.fdt")"

main[1] where

[1] org.apache.lucene.codecs.lucene90.LZ4WithPresetDictCompressionMode$LZ4WithPresetDictDecompressor.decompress (LZ4WithPresetDictCompressionMode.java:88)

[2] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader$BlockState.document (Lucene90CompressingStoredFieldsReader.java:595)

[3] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.document (Lucene90CompressingStoredFieldsReader.java:610)

[4] org.apache.lucene.codecs.lucene90.compressing.Lucene90CompressingStoredFieldsReader.visitDocument (Lucene90CompressingStoredFieldsReader.java:628)

[5] org.apache.lucene.index.CodecReader.document (CodecReader.java:89)

[6] org.apache.lucene.index.BaseCompositeReader.document (BaseCompositeReader.java:154)

[7] org.apache.lucene.index.IndexReader.document (IndexReader.java:380)

[8] org.apache.lucene.search.IndexSearcher.doc (IndexSearcher.java:380)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:214)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

public SegmentTermsEnum(FieldReader fr) throws IOException {

this.fr = fr;

// if (DEBUG) {

// System.out.println("BTTR.init seg=" + fr.parent.segment);

// }

stack = new SegmentTermsEnumFrame[0];

// Used to hold seek by TermState, or cached seek

staticFrame = new SegmentTermsEnumFrame(this, -1);

if (fr.index == null) {

fstReader = null;

} else {

fstReader = fr.index.getBytesReader();

}

// Init w/ root block; don't use index since it may

// not (and need not) have been loaded

for (int arcIdx = 0; arcIdx < arcs.length; arcIdx++) {

arcs[arcIdx] = new FST.Arc<>();

}

currentFrame = staticFrame;

final FST.Arc<BytesRef> arc;

if (fr.index != null) {

arc = fr.index.getFirstArc(arcs[0]);

// Empty string prefix must have an output in the index!

assert arc.isFinal();

} else {

arc = null;

}

// currentFrame = pushFrame(arc, rootCode, 0);

// currentFrame.loadBlock();

validIndexPrefix = 0;

// if (DEBUG) {

// System.out.println("init frame state " + currentFrame.ord);

// printSeekState();

// }

// System.out.println();

// computeBlockStats().print(System.out);

}

解析获取getArc

private FST.Arc<BytesRef> getArc(int ord) {

if (ord >= arcs.length) {

@SuppressWarnings({"rawtypes", "unchecked"})

final FST.Arc<BytesRef>[] next =

new FST.Arc[ArrayUtil.oversize(1 + ord, RamUsageEstimator.NUM_BYTES_OBJECT_REF)];

System.arraycopy(arcs, 0, next, 0, arcs.length);

for (int arcOrd = arcs.length; arcOrd < next.length; arcOrd++) {

next[arcOrd] = new FST.Arc<>();

}

arcs = next;

}

return arcs[ord];

}

Breakpoint hit: "thread=main", org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.getArc(), line=222 bci=0

222 if (ord >= arcs.length) {

main[1] where

[1] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.getArc (SegmentTermsEnum.java:222)

[2] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.seekExact (SegmentTermsEnum.java:511)

[3] org.apache.lucene.index.TermStates.loadTermsEnum (TermStates.java:117)

[4] org.apache.lucene.index.TermStates.build (TermStates.java:102)

[5] org.apache.lucene.search.TermQuery.createWeight (TermQuery.java:227)

[6] org.apache.lucene.search.IndexSearcher.createWeight (IndexSearcher.java:885)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:686)

[8] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[10] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[11] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1] where

[1] org.apache.lucene.search.Weight$DefaultBulkScorer.scoreAll (Weight.java:300)

[2] org.apache.lucene.search.Weight$DefaultBulkScorer.score (Weight.java:247)

[3] org.apache.lucene.search.BulkScorer.score (BulkScorer.java:38)

[4] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:770)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[7] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[8] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1] list

296 DocIdSetIterator iterator,

297 TwoPhaseIterator twoPhase,

298 Bits acceptDocs)

299 throws IOException {

300 => if (twoPhase == null) {

301 for (int doc = iterator.nextDoc();

302 doc != DocIdSetIterator.NO_MORE_DOCS;

303 doc = iterator.nextDoc()) {

304 if (acceptDocs == null || acceptDocs.get(doc)) {

305 collector.collect(doc);

main[1] print iterator

iterator = "org.apache.lucene.search.ImpactsDISI@6279cee3"

main[1] list

494 @Override

495 public int advance(int target) throws IOException {

496 // current skip docID < docIDs generated from current buffer <= next skip docID

497 // we don't need to skip if target is buffered already

498 => if (docFreq > BLOCK_SIZE && target > nextSkipDoc) {

499

500 if (skipper == null) {

501 // Lazy init: first time this enum has ever been used for skipping

502 skipper =

503 new Lucene90SkipReader(

main[1] where

[1] org.apache.lucene.codecs.lucene90.Lucene90PostingsReader$BlockDocsEnum.advance (Lucene90PostingsReader.java:498)

[2] org.apache.lucene.index.SlowImpactsEnum.advance (SlowImpactsEnum.java:77)

[3] org.apache.lucene.search.ImpactsDISI.advance (ImpactsDISI.java:135)

[4] org.apache.lucene.search.ImpactsDISI.nextDoc (ImpactsDISI.java:140)

[5] org.apache.lucene.search.Weight$DefaultBulkScorer.scoreAll (Weight.java:301)

[6] org.apache.lucene.search.Weight$DefaultBulkScorer.score (Weight.java:247)

[7] org.apache.lucene.search.BulkScorer.score (BulkScorer.java:38)

[8] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:770)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[10] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[11] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[12] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[13] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[14] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

生成iterator 的相关类 , 对应的是SegmentTermsEnum

main[1] where

[1] org.apache.lucene.search.TermQuery$TermWeight.getTermsEnum (TermQuery.java:145)

[2] org.apache.lucene.search.TermQuery$TermWeight.scorer (TermQuery.java:107)

[3] org.apache.lucene.search.Weight.bulkScorer (Weight.java:166)

[4] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:767)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[7] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[8] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[9] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[10] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1] print termsEnum

termsEnum = "org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum@1a84f40f"

getTermsEnum 方法能拿到term的统计位置偏移,SegmentTermsEnum 不包含dociterator

main[1] where

[1] org.apache.lucene.index.Term.bytes (Term.java:128)

[2] org.apache.lucene.search.TermQuery$TermWeight.getTermsEnum (TermQuery.java:145)

[3] org.apache.lucene.search.TermQuery$TermWeight.scorer (TermQuery.java:107)

[4] org.apache.lucene.search.Weight.bulkScorer (Weight.java:166)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:767)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[8] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[10] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[11] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

144 final TermsEnum termsEnum = context.reader().terms(term.field()).iterator();

145 => termsEnum.seekExact(term.bytes(), state);

146 return termsEnum;

147 }

这里的term.bytes() 就是我们的搜索值 , 所以term对应的倒排信息是从这里开始读的(还没看完,暂时那么定)

读出倒排信息之后,开始排序. score 有iteration 可以遍历所有doc_id

main[1] list

348 // (needsFreq=false)

349 private boolean isFreqsRead;

350 private int singletonDocID; // docid when there is a single pulsed posting, otherwise -1

351

352 => public BlockDocsEnum(FieldInfo fieldInfo) throws IOException {

353 this.startDocIn = Lucene90PostingsReader.this.docIn;

354 this.docIn = null;

355 indexHasFreq = fieldInfo.getIndexOptions().compareTo(IndexOptions.DOCS_AND_FREQS) >= 0;

356 indexHasPos =

357 fieldInfo.getIndexOptions().compareTo(IndexOptions.DOCS_AND_FREQS_AND_POSITIONS) >= 0;

main[1] where

[1] org.apache.lucene.codecs.lucene90.Lucene90PostingsReader$BlockDocsEnum.<init> (Lucene90PostingsReader.java:352)

[2] org.apache.lucene.codecs.lucene90.Lucene90PostingsReader.postings (Lucene90PostingsReader.java:258)

[3] org.apache.lucene.codecs.lucene90.Lucene90PostingsReader.impacts (Lucene90PostingsReader.java:280)

[4] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.impacts (SegmentTermsEnum.java:1,150)

[5] org.apache.lucene.search.TermQuery$TermWeight.scorer (TermQuery.java:114)

[6] org.apache.lucene.search.Weight.bulkScorer (Weight.java:166)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:767)

[8] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[10] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[11] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[12] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[13] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

Breakpoint hit: "thread=main", org.apache.lucene.search.TopDocsCollector.populateResults(), line=64 bci=0

64 for (int i = howMany - 1; i >= 0; i--) {

main[1] where

[1] org.apache.lucene.search.TopDocsCollector.populateResults (TopDocsCollector.java:64)

[2] org.apache.lucene.search.TopDocsCollector.topDocs (TopDocsCollector.java:166)

[3] org.apache.lucene.search.TopDocsCollector.topDocs (TopDocsCollector.java:98)

[4] org.apache.lucene.search.IndexSearcher$2.reduce (IndexSearcher.java:526)

[5] org.apache.lucene.search.IndexSearcher$2.reduce (IndexSearcher.java:505)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:694)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[8] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[10] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[11] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1]

main[1] dump collector

collector = {

org.apache.lucene.search.TopScoreDocCollector.docBase: 0

org.apache.lucene.search.TopScoreDocCollector.pqTop: instance of org.apache.lucene.search.ScoreDoc(id=1529)

org.apache.lucene.search.TopScoreDocCollector.hitsThresholdChecker: instance of org.apache.lucene.search.HitsThresholdChecker$LocalHitsThresholdChecker(id=1530)

org.apache.lucene.search.TopScoreDocCollector.minScoreAcc: null

org.apache.lucene.search.TopScoreDocCollector.minCompetitiveScore: 0.0

org.apache.lucene.search.TopScoreDocCollector.$assertionsDisabled: true

org.apache.lucene.search.TopDocsCollector.EMPTY_TOPDOCS: instance of org.apache.lucene.search.TopDocs(id=1531)

org.apache.lucene.search.TopDocsCollector.pq: instance of org.apache.lucene.search.HitQueue(id=1532)

org.apache.lucene.search.TopDocsCollector.totalHits: 0

org.apache.lucene.search.TopDocsCollector.totalHitsRelation: instance of org.apache.lucene.search.TotalHits$Relation(id=1533)

}

main[1] print collector

collector = "org.apache.lucene.search.TopScoreDocCollector$SimpleTopScoreDocCollector@62bd765"

690 private <C extends Collector, T> T search(

691 Weight weight, CollectorManager<C, T> collectorManager, C firstCollector) throws IOException {

692 if (executor == null || leafSlices.length <= 1) {

693 search(leafContexts, weight, firstCollector);

694 => return collectorManager.reduce(Collections.singletonList(firstCollector));

695 } else {

696 final List<C> collectors = new ArrayList<>(leafSlices.length);

697 collectors.add(firstCollector);

698 final ScoreMode scoreMode = firstCollector.scoreMode();

699 for (int i = 1; i < leafSlices.length; ++i) {

main[1] where

[1] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:694)

[2] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[3] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[4] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[5] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[6] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

在org.apache.lucene.search.TopScoreDocCollector.create , 一直往上翻,发现org.apache.lucene.search.IndexSearcher.searchAfter 就已经有了.

那么这个hit数量是从哪里初始化的呢?

很明显,search会填充firstCollector的数据,那么是在哪里赋值的呢?

protected void search(List<LeafReaderContext> leaves, Weight weight, Collector collector)

throws IOException {

// TODO: should we make this

// threaded...? the Collector could be sync'd?

// always use single thread:

for (LeafReaderContext ctx : leaves) { // search each subreader

final LeafCollector leafCollector;

try {

leafCollector = collector.getLeafCollector(ctx);

} catch (

@SuppressWarnings("unused")

CollectionTerminatedException e) {

// there is no doc of interest in this reader context

// continue with the following leaf

continue;

}

BulkScorer scorer = weight.bulkScorer(ctx); /// 在这里会获取total hits

if (scorer != null) {

try {

scorer.score(leafCollector, ctx.reader().getLiveDocs());

} catch (

@SuppressWarnings("unused")

CollectionTerminatedException e) {

// collection was terminated prematurely

// continue with the following leaf

}

}

}

}

看完最后的堆栈,我们确定了totalHits 是在这里赋值的 , 也就是只要调用了一次就自增一, 很明显这是一个统计,那么这个统计就是命中的搜索内容,那么搜索内容是怎么来的呢?

我们只能往上追溯

main[1] where

[1] org.apache.lucene.search.TopScoreDocCollector$SimpleTopScoreDocCollector$1.collect (TopScoreDocCollector.java:73)

[2] org.apache.lucene.search.Weight$DefaultBulkScorer.scoreAll (Weight.java:305)

[3] org.apache.lucene.search.Weight$DefaultBulkScorer.score (Weight.java:247)

[4] org.apache.lucene.search.BulkScorer.score (BulkScorer.java:38)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:770)

[6] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[8] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[10] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[11] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

@Override

public void collect(int doc) throws IOException {

float score = scorer.score(); // 计算分数 , 也是回调专用的score 函数 , 插件化

// This collector relies on the fact that scorers produce positive values:

assert score >= 0; // NOTE: false for NaN

totalHits++; // hit +1 在这里触发

hitsThresholdChecker.incrementHitCount();

if (minScoreAcc != null && (totalHits & minScoreAcc.modInterval) == 0) {

updateGlobalMinCompetitiveScore(scorer);

}

if (score <= pqTop.score) {

if (totalHitsRelation == TotalHits.Relation.EQUAL_TO) {

// we just reached totalHitsThreshold, we can start setting the min

// competitive score now

updateMinCompetitiveScore(scorer);

}

// Since docs are returned in-order (i.e., increasing doc Id), a document

// with equal score to pqTop.score cannot compete since HitQueue favors

// documents with lower doc Ids. Therefore reject those docs too.

return;

}

pqTop.doc = doc + docBase;

pqTop.score = score;

pqTop = pq.updateTop();

updateMinCompetitiveScore(scorer);

}

};

继续往上面推之后,我们找到了堆栈,scorer 是根据context生成的

/**

* Optional method, to return a {@link BulkScorer} to score the query and send hits to a {@link

* Collector}. Only queries that have a different top-level approach need to override this; the

* default implementation pulls a normal {@link Scorer} and iterates and collects the resulting

* hits which are not marked as deleted.

*

* @param context the {@link org.apache.lucene.index.LeafReaderContext} for which to return the

* {@link Scorer}.

* @return a {@link BulkScorer} which scores documents and passes them to a collector.

* @throws IOException if there is a low-level I/O error

*/

public BulkScorer bulkScorer(LeafReaderContext context) throws IOException {

Scorer scorer = scorer(context);

if (scorer == null) {

// No docs match

return null;

}

// This impl always scores docs in order, so we can

// ignore scoreDocsInOrder:

return new DefaultBulkScorer(scorer);

}

再往上看: 刚刚看到了bulkScorer 回调了一个scorer 方法,这个scorer抽象方法的实现是在org.apache.lucene.search.TermQuery$TermWeight.scorer

这个scorer方法根据入参context 以及外部类termQuery.term计算htis命中的个数

main[1] list

103 assert termStates == null || termStates.wasBuiltFor(ReaderUtil.getTopLevelContext(context))

104 : "The top-reader used to create Weight is not the same as the current reader's top-reader ("

105 + ReaderUtil.getTopLevelContext(context);

106 ;

107 => final TermsEnum termsEnum = getTermsEnum(context);

108 if (termsEnum == null) {

109 return null;

110 }

111 LeafSimScorer scorer =

112 new LeafSimScorer(simScorer, context.reader(), term.field(), scoreMode.needsScores()); // 这里term是外部类的term ,也就是this$0.term

main[1] where

[1] org.apache.lucene.search.TermQuery$TermWeight.scorer (TermQuery.java:107)

[2] org.apache.lucene.search.Weight.bulkScorer (Weight.java:166)

[3] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:767)

[4] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:693)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:687)

[6] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[8] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[9] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

所以之后会调用advance,最后调用的是下面这个advance方法, 这里会用到docTermStartFP , 那么这个遍历在哪里初始化?

其实是在termStates里面获取,初始化的地方在docTermStartFP = termState.docStartFP;

lucene\core\src\java\org\apache\lucene\codecs\lucene90\Lucene90PostingsReader.java

@Override

public int advance(int target) throws IOException {

// current skip docID < docIDs generated from current buffer <= next skip docID

// we don't need to skip if target is buffered already

if (docFreq > BLOCK_SIZE && target > nextSkipDoc) {

if (skipper == null) {

// Lazy init: first time this enum has ever been used for skipping

skipper =

new Lucene90SkipReader(

docIn.clone(), MAX_SKIP_LEVELS, indexHasPos, indexHasOffsets, indexHasPayloads);

}

if (!skipped) {

assert skipOffset != -1;

// This is the first time this enum has skipped

// since reset() was called; load the skip data:

skipper.init(docTermStartFP + skipOffset, docTermStartFP, 0, 0, docFreq);

skipped = true;

}

// always plus one to fix the result, since skip position in Lucene90SkipReader

// is a little different from MultiLevelSkipListReader

final int newDocUpto = skipper.skipTo(target) + 1;

if (newDocUpto >= blockUpto) {

// Skipper moved

assert newDocUpto % BLOCK_SIZE == 0 : "got " + newDocUpto;

blockUpto = newDocUpto;

// Force to read next block

docBufferUpto = BLOCK_SIZE;

accum = skipper.getDoc(); // actually, this is just lastSkipEntry

docIn.seek(skipper.getDocPointer()); // now point to the block we want to search

// even if freqBuffer were not read from the previous block, we will mark them as read,

// as we don't need to skip the previous block freqBuffer in refillDocs,

// as we have already positioned docIn where in needs to be.

isFreqsRead = true;

}

// next time we call advance, this is used to

// foresee whether skipper is necessary.

nextSkipDoc = skipper.getNextSkipDoc();

}

if (docBufferUpto == BLOCK_SIZE) {

refillDocs();

}

// Now scan... this is an inlined/pared down version

// of nextDoc():

long doc;

while (true) {

doc = docBuffer[docBufferUpto];

if (doc >= target) {

break;

}

++docBufferUpto;

}

docBufferUpto++;

return this.doc = (int) doc;

}

@Override

public long cost() {

return docFreq;

}

}

那么我们继续看termStates是怎么初始化的? 我先猜测term会是termStates 的一个成员变量

通过断点,我们最后找到了下面这个:

main[1] list

178 }

179

180 @Override

181 public BlockTermState newTermState() {

182 => return new IntBlockTermState();

183 }

184

185 @Override

186 public void close() throws IOException {

187 IOUtils.close(docIn, posIn, payIn);

main[1] where

[1] org.apache.lucene.codecs.lucene90.Lucene90PostingsReader.newTermState (Lucene90PostingsReader.java:182)

[2] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnumFrame.<init> (SegmentTermsEnumFrame.java:101)

[3] org.apache.lucene.codecs.lucene90.blocktree.SegmentTermsEnum.<init> (SegmentTermsEnum.java:76)

[4] org.apache.lucene.codecs.lucene90.blocktree.FieldReader.iterator (FieldReader.java:153)

[5] org.apache.lucene.index.TermStates.loadTermsEnum (TermStates.java:116)

[6] org.apache.lucene.index.TermStates.build (TermStates.java:102)

[7] org.apache.lucene.search.TermQuery.createWeight (TermQuery.java:227)

[8] org.apache.lucene.search.IndexSearcher.createWeight (IndexSearcher.java:885)

[9] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:686)

[10] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[11] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[12] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[13] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

main[1]

最后这里应该就是最最核心的获取词的流程了,i hope so

main[1] list

113

114 private static TermsEnum loadTermsEnum(LeafReaderContext ctx, Term term) throws IOException {

115 final Terms terms = Terms.getTerms(ctx.reader(), term.field());

116 final TermsEnum termsEnum = terms.iterator();

117 => if (termsEnum.seekExact(term.bytes())) {

118 return termsEnum;

119 }

120 return null;

121 }

122

main[1] print term.bytes()

term.bytes() = "[61 6d]"

main[1] where

[1] org.apache.lucene.index.TermStates.loadTermsEnum (TermStates.java:117)

[2] org.apache.lucene.index.TermStates.build (TermStates.java:102)

[3] org.apache.lucene.search.TermQuery.createWeight (TermQuery.java:227)

[4] org.apache.lucene.search.IndexSearcher.createWeight (IndexSearcher.java:885)

[5] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:686)

[6] org.apache.lucene.search.IndexSearcher.searchAfter (IndexSearcher.java:532)

[7] org.apache.lucene.search.IndexSearcher.search (IndexSearcher.java:542)

[8] org.apache.lucene.demo.SearchFiles.doPagingSearch (SearchFiles.java:180)

[9] org.apache.lucene.demo.SearchFiles.main (SearchFiles.java:150)

最后的最后应该是调用这里: 获取所有的term的个数,具体是哪里还需要判断,但是路径应该就是这里了

// Target's prefix matches this block's prefix; we

// scan the entries check if the suffix matches.

public SeekStatus scanToTermLeaf(BytesRef target, boolean exactOnly) throws IOException {

// if (DEBUG) System.out.println(" scanToTermLeaf: block fp=" + fp + " prefix=" + prefix + "

// nextEnt=" + nextEnt + " (of " + entCount + ") target=" + brToString(target) + " term=" +

// brToString(term));

assert nextEnt != -1;

ste.termExists = true;

subCode = 0;

if (nextEnt == entCount) {

if (exactOnly) {

fillTerm();

}

return SeekStatus.END;

}

assert prefixMatches(target);

// TODO: binary search when all terms have the same length, which is common for ID fields,

// which are also the most sensitive to lookup performance?

// Loop over each entry (term or sub-block) in this block:

do {

nextEnt++;

suffix = suffixLengthsReader.readVInt();

// if (DEBUG) {

// BytesRef suffixBytesRef = new BytesRef();

// suffixBytesRef.bytes = suffixBytes;

// suffixBytesRef.offset = suffixesReader.getPosition();

// suffixBytesRef.length = suffix;

// System.out.println(" cycle: term " + (nextEnt-1) + " (of " + entCount + ") suffix="

// + brToString(suffixBytesRef));

// }

startBytePos = suffixesReader.getPosition();

suffixesReader.skipBytes(suffix);

// Loop over bytes in the suffix, comparing to the target

final int cmp =

Arrays.compareUnsigned(

suffixBytes,

startBytePos,

startBytePos + suffix,

target.bytes,

target.offset + prefix,

target.offset + target.length);

if (cmp < 0) {